Google and Neural Networks: Now Things Are Getting REALLY Interesting,…

Back in October 2002, I appeared as a guest speaker at the Chicago (Illinois) URISA conference. My topic was the commercial and governmental applicability of neural networks. Although the talk was well received (the audience actually clapped, some asked to have pictures taken with me, and nobody fell asleep), at the time it was regarded as, well, out there. After all, who the hell was talking about – much less knew anything about – neural networks?

Fast forward to 2013 and here we are: Google recently (and quietly) acquired a start-up, DNNResearch, whose primary purpose is the commercial application and development of practical neural networks.

Before you get all strange and creeped out: neural networks are not brains floating in vials, locked away in some weird, hidden laboratory à la The X Files, cloaked in poor lighting (cue the evil laughter BWAHAHAHA!), but rather high-level, complicated computer models attempting to simulate (in a fashion) how we think, approach and solve problems.

Turns out there’s a lot more to this picture than meets the mind’s eye – and the folks at Google know this all too well. As recently reported:

Incorporated last year, the startup’s website (DNNResearch) is conspicuously devoid of any identifying information — just a blank, black screen.

That’s about it: no big announcement, and little or no mention in any major publication. Try the website for yourself; little information can be gleaned. And yet, looking into the personnel involved, we’re talking about some serious, substantial talent here:

Professor Hinton is the founding director of the Gatsby Computational Neuroscience Unit at University College in London, holds a Canada Research Chair in Machine Learning and is the director of the Canadian Institute for Advanced Research-funded program on “Neural Computation and Adaptive Perception.” Also a fellow of The Royal Society, Professor Hinton has become renowned for his work on neural nets and his research into “unsupervised learning procedures for neural networks with rich sensory input.”

So what’s the fuss? Read on,…

While the financial terms of the deal were not disclosed, Google was eager to acquire the startup’s research on neural networks — as well as the talent behind it — to help it go beyond traditional search algorithms in its ability to identify pieces of content, images, voice, text and so on. In its announcement today, the University of Toronto said that the team’s research “has profound implications for areas such as speech recognition, computer vision and language understanding.”

This is big. It’s very similar to when Nikola Tesla’s company and assets / models (along with Tesla himself, who agreed to come along) were bought out by George Westinghouse, and we all know what happened then: using Tesla’s alternating current (AC) model, large-scale electrical networks were developed and put into practical use on a national and international scale.

One cannot help but sense that Google’s other luminary, Ray Kurzweil, is somehow behind this, and for good reason: for those who seek to attain the (AI) singularity, neural networks would be one viable path to take.

What exactly is a neural network and how does it work? From my October 2002 URISA presentation paper:

Neural networks differ radically from regular search engines, which employ ‘Boolean’ logic; search engines are poor relatives of neural networks. For example, a user enters a keyword or term into a text field, such as the word “cat”. The typical search engine then searches for documents containing the word “cat”. It simply looks for occurrences of the search term in a document, regardless of how the term is used or the context in which the user is interested in “cat”, which leaves the information delivered of minimal use. Keyword engines do little but seek words, which ultimately makes them very manually intensive, requiring users to continually manage and update keyword associations or “topics” such as

cat = tiger = feline or cat is 90% feline, 10% furry.

Keyword search methodologies rely heavily on user sophistication: users must enter queries in fairly complex and specific language, and keep doing so until the desired file is obtained. Standard keyword searching, then, does not qualify as a neural network. Neural networks go further by matching concepts and by learning, through the user interface, what a user will generally seek. They learn to understand users’ interests or expertise by extracting key ideas from the information a user accesses on a regular basis.
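To make the contrast in that passage concrete, here is a minimal Python sketch of ‘Boolean’ keyword matching, plus the kind of hand-maintained topic table (cat = tiger = feline) that such engines lean on. The documents, the `TOPICS` table and the function names are hypothetical illustrations, not code from any real search engine.

```python
# Hypothetical example documents; not taken from any real index.
DOCUMENTS = {
    "doc1": "The cat sat on the mat.",
    "doc2": "Caterpillar equipment for heavy construction.",
    "doc3": "Lions and tigers are large felines.",
    "doc4": "How to feed a kitten.",
}

# Hypothetical hand-maintained keyword associations ("topics"):
# the manual upkeep that keyword engines depend on.
TOPICS = {
    "cat": {"cat", "tiger", "tigers", "feline", "felines"},
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into bare words, dropping punctuation."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in text.lower())
    return set(cleaned.split())


def keyword_search(term: str) -> list[str]:
    """Return IDs of documents containing the exact term as a word (Boolean match)."""
    term = term.lower()
    return [doc_id for doc_id, text in DOCUMENTS.items() if term in tokenize(text)]


def topic_search(term: str) -> list[str]:
    """Expand the term via the manual topic table, then OR the keywords together."""
    terms = TOPICS.get(term.lower(), {term.lower()})
    return [doc_id for doc_id, text in DOCUMENTS.items() if terms & tokenize(text)]


print(keyword_search("cat"))  # ['doc1']
print(topic_search("cat"))    # ['doc1', 'doc3']
```

Note that `doc4`, about a kitten, is missed by both searches: the engine only ‘knows’ what someone has typed into the topic table, which is exactly the manual upkeep the passage describes.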

So let’s bottom line it (and again from my presentation paper):

Neural networks try to imitate human mental processes by creating connections between computer processors in a manner similar to brain neurons. How a neural network is designed, and the weight (by type or relevancy) of its connections, determine the output. Neural networks are digital in nature and function on pre-determined mathematical models (although efforts are underway to build biological computer networks using biological material as opposed to hard circuitry). Neural networks work best when drawing upon large and/or multiple databases within the context of fast telecommunications platforms. They are statistically modeled to establish relationships between inputs and the appropriate output, creating electronic mechanisms similar to human brain neurons. The resulting mathematical models are implemented in ready-to-install software packages that provide human-like learning, allowing analysis to take place.
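The idea that connection weights determine the output, and that those weights are statistically fitted to example inputs and outputs, can be sketched with a single artificial neuron (a classic perceptron). This is a minimal Python illustration under stated assumptions: the training data (the logical OR of two inputs), the learning rate and the epoch count are arbitrary choices, and the sketch is not drawn from the presentation paper, nor is it the kind of deep network DNNResearch worked on.

```python
# A single artificial "neuron" (perceptron): a weighted sum of the inputs
# plus a bias, passed through a step function. Training adjusts the weights.


def predict(weights: list[float], bias: float, inputs: list[float]) -> int:
    """Fire (return 1) if the weighted sum of the inputs plus bias exceeds zero."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(samples, learning_rate=0.1, epochs=20):
    """Perceptron learning rule: nudge each weight by rate * error * input."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training data: the logical OR of two inputs.
OR_SAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(OR_SAMPLES)
for inputs, target in OR_SAMPLES:
    print(inputs, "predicted:", predict(weights, bias, inputs), "target:", target)
```

After training, every prediction matches its target. A single perceptron can only learn patterns that one straight line can separate (OR works, XOR does not); multi-layer, or ‘deep’, networks stack many such units to get past that limit.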

Understand, neural networks are not to be confused with AI (Artificial Intelligence), but the approaches they employ do offer viable means and models, with rather practical applications reaching across many markets: consumer, commercial, governmental and military.

And BTW: reread that passage from my paper with the realization that Google is moving into this arena; you’ll appreciate the implications.

But wait; there’s more.

From the news article:

For Google, this means getting access, in particular, to the team’s research into the improvement of object recognition, as the company looks to improve the quality of its image search and facial recognition capabilities. The company recently acquired Viewdle, which owns a number of patents on facial recognition, following its acquisition of two similar startups in PittPatt in 2011 and Neven Vision all the way back in 2006. In addition, Google has been looking to improve its voice recognition, natural language processing and machine learning, integrating that with its knowledge graph to help develop a brave new search engine. Google already has deep image search capabilities on the web, but, going forward, as smartphones proliferate, it will look to improve that experience on mobile.

So, let’s recap. We’re talking about:

* a very large information processing firm with seriously deep pockets and arguably one of the largest (if not the fastest) networks ever created;

* a very large information processing firm, working with folks noted for their views and research on the AI singularity, purchasing a firm on the cutting edge of neural networks;

* a very large information processing firm also purchasing a firm utilizing advanced facial and voice recognition.

I’m buying Google stock.

What’s also remarkable (and somewhat overlooked; kudos to TechCrunch for noting this) is that Google had, some time ago, funded Dr. Hinton’s research through a small initial grant of about $600,000, and then went on to buy out his start-up company.

Big things are afoot – things with tremendous long-term ramifications for all of us.

Don’t be surprised if something out in Mountain View, California passes a Turing Test sooner than anybody expects.

For more about Google’s recent purchase of DNNResearch, check out this article:

To read my presentation paper on neural networks, and to truly understand what this means along with some of the day-to-day applications neural networks offer, check out this link:

http://www.scribd.com/doc/112086324/The-Ready-Application-of-Neural-Networks

The Mind Meld: It’s Actually Here and It’s Real

Here’s a chance to say goodbye to those student loans,…!

In an article appearing on Nature.com, scientists describe a method they created by which two separate minds can communicate and share their insights via electronic means.

Scientists linked two rats – one located in Brazil and the other in North Carolina, United States – via a brain-to-brain interface (BTBI) connected across the Internet. What one rat learned was shared with the other: in this case, having learned that pulling a specific lever earned a reward, one lab rat was able to share this information with the other. The result was an accuracy rate of 95% or greater, far better than if the rats hadn’t been connected at all.

Before you get overly excited, however, let us understand something: this approach is surgically invasive and thus we can’t readily expect folks to have this kind of thing on the streets overnight.

But what this experiment did prove was that it is possible, and that, given time, new means of training and education (as but one example) could be offered. Remember those ‘Matrix’ films, more specifically the parts where the protagonist Neo logs on to the network and is able to ‘learn’ to do things simply through electronic means? You get the idea.

Other studies have suggested that the brain, in close coordination with the body, can indeed learn and guide the body through mental routines to perform acts that would normally be acquired only through ‘normal’ means (i.e., repetition, practice and physical action). Some studies have even suggested that we learn as we dream, reviewing the previous day’s events and going over what we’ve experienced in an effort to better cope with our surroundings.

So much for student loans, eh? Plug me in, you say.

Wait a minute; don’t hold your breath just yet. It’s going to take a while before we develop non-invasive means of connecting our brains to one another. And given that our brains are substantially more complex than a rat’s, where and how we wire them up is going to be another key determinant in making this a reality for people.

Minor issues of morality and invasive technology aside, all of this raises a number of questions. To name but one: somebody’s gotta know how to do things, and unless you actually do them, how else will that knowledge be passed on from brain to brain? Do knowledge and practical experience fade over time, like badly Xeroxed copies? Or can they be passed on and on without end?

Imagine the legal liability of folks learning how to drive a car, only to replicate the same quirks and tics that the original ‘learned’ mind practiced, and having them passed on and on (I can hear it now: ‘you drive just like your great-great-great grandfather!’).

Do emotions, wishes and desires from our subconscious also get passed on to others without our knowing it, like viruses and trojans passed between computer programs and files?

Could people undergoing these processes, over time, be subconsciously conditioned into certain beliefs without their knowledge, making them into ‘good little citizens’ trained not to question authority?

Or be trained as soldiers to act in specific ways without fully realizing it, learning to hate without reason and to function without fear at the cost of their lives?

Another very important question: we could potentially learn more quickly, but what of retaining that knowledge? In the long run, is it better to take this approach, or to follow the tried-and-true route of repetition and practice to ensure that what we learn stays in our heads?

And what happens to us as a society when, over time, we learn more from the machines as opposed to learning on our own?

What kind of people do we become when the majority of our learning and experience is attained through plug-in modules and not through our own efforts?

These are but some of the questions we need to consider before we start opening up those ‘educational centers’ in strip malls where we come on down, walk in the door, place our credit card on the counter and learn how to be brain surgeons.

When I was growing up, a saying was instilled in us as students while we memorized the multiplication tables and Shakespearean sonnets: the mind is a muscle – it must be exercised.

I recall one line of Shakespeare:

There is nothing either good or bad, but thinking makes it so.

(Hamlet speaking with Rosencrantz and Guildenstern; kind of ironic when you read the context this quote comes from,…)

Here is the link to learn more about this groundbreaking discovery: http://www.nature.com/srep/2013/130228/srep01319/full/srep01319.html

More Overlooked News: Even Cheaper TVs and Batteries Lasting for Days

Like many kids, I’ve always liked taking things apart – especially when it came to stuff with bulbs, circuits and whatnot. Odds were I could never put it back together again, but the idea of playing around with neat stuff like abandoned televisions, radios or other circuitry always appealed to me (have soldering gun will travel; and don’t get me started on the fun of those old Estes Rockets,…!).

Fast forward to the present day, where a lab accident born of this very same attitude may very well have changed our future.

Late last year, researchers at UCLA led by chemist Richard Kaner were just wrapping up work on an efficient method for producing high-quality sheets of the supermaterial known as graphene with a consumer-grade DVD drive (!!!!) when somebody made an accidental discovery. One of the researchers, Maher El-Kady, wired a small square of their high-quality carbon sheets up to a lightbulb, and something really cool happened. As it turns out, Kaner and El-Kady had stumbled upon an energy storage medium with revolutionary potential, akin to filling your smartphone with a long-lasting charge in just a couple of seconds, or charging up an electric car in a minute.

Uh, er, agh, what?!?!???

A lot happened in that last paragraph, so let’s back up now:

1) These guys developed a new manufacturing methodology for mass-producing graphene (which is not an easy thing to do using the usual processes) just by using a regular DVD drive to conduct a form of laser inscribing (this is roughly akin to making diamonds with a typical steam iron);

2) In the process of making this groundbreaking discovery, these guys also stumbled upon a new and potentially VERY powerful means of energy storage (technically, a graphene-based supercapacitor rather than a conventional battery) that can be charged not in hours, but in literally minutes, if not seconds.

Ok, so what does this all mean?

Graphene is a very cool and outstanding material. It’s used, or being developed for use, in (among other applications) flat-screen TVs, transistors, computers and electronics, and structures that need a high strength-to-weight ratio (e.g., airplanes, rockets, etc.). Basically, it’s a specialized material with universal application that hasn’t been readily available for such uses, given the cost and effort of making it. So if this new process is indeed confirmed (and apparently it has been), we now have a way to produce these items at even LOWER costs, using technology that is outdated (guess there’s gold in thar used DVD drives after all,…).

So we’re talking about flat-screen TV prices going even lower while picture quality improves (lower costs mean more consumers can afford the more advanced versions). In addition, with the anticipated introduction of roll-out screens for the general consumer (imagine screens as thin as tinfoil: flexible and yet strong, being made of graphene), we’re now talking about an earlier introduction date as well as cheaper pricing. People will soon be able to literally roll up their large-screen TVs like yoga mats and take them along to meetings, events or just for kicks. And the cost of making computers will drop even lower, while making stronger, safer airplanes (or even cars) becomes more readily possible.

The list goes on, but there’s the other crucial and potentially even more explosive aspect of this lab accident.

If the initial indicators are any sign, recharging an electric car using this technology would literally take minutes: about the same amount of time it takes to fill up a gas-powered car. So rather than having to plug in your electric car (a Tesla, say) for several hours for a full charge, we’re talking about the time it takes to fuel up at the gas station. In addition, smaller batteries made with this material / process would be able to last for days while charging up much more rapidly. Think of cell phones, computers or other battery-powered devices lasting for days rather than mere hours, and you’ll start to get the idea of what this all means.

To be sure, there’s more work to be done, but this is a very good start.

This is big; whoever funds this is going to do very well on the patent rights alone.

It makes one wonder what gave them the idea of applying electricity to the material at hand, but then again, I’d attribute it to the ‘what the hell, let’s see what happens if we do this?’ attitude: the very same attitude that helped bring about a lot of other neat inventions.

Not bad for a lab accident, eh?

For more on this, read the research papers for yourself:

http://www.sciencemag.org/content/335/6074/1326

http://www.nature.com/ncomms/journal/v4/n2/full/ncomms2446.html

Things of the Past: Dying Sciences and Languages?

A recent article points to a new and disturbing (but, given today’s economic climate, perhaps not surprising) trend: dying disciplines and sciences. Like languages, which are disappearing as the world becomes more and more ‘cosmopolitan’ and ‘interconnected’, disciplines too are starting to fade away – and among them is the science of archeology.

Leaving aside the loss of some pretty cool television shows on the Discovery or the Smithsonian channels, the potential loss of archeology has tremendous – and deeply disruptive – significance:

Combining insights from natural and social sciences, archaeology offers an exceptionally powerful way of understanding many of the most inscrutable aspects of our past – think of the difficulty of interpreting Stonehenge, for example, and what has now been achieved by this kind of sophisticated analysis. Archaeologists have plenty to tell us about the impact of climate change and fuel use, or the rise and decline of complex societies: they give us access, in other words, to a vast store of human experience, which is of direct relevance to some of the greatest challenges we now face.

When you think about it, that’s pretty heavy stuff. What other discipline can you name that combines so many fields, from the sciences of weather, farming, energy and the military to political, historical and economic / financial studies? And this work is not done solely within the realm of obscure theory or some computer-driven simulation: archeology is done in the here and now, about the past (you literally have to get down and dirty in archeology).

Indiana Jones aside, archeology is not some arcane branch of science: it’s about who we are and where we are going. In military terms, there is the phrase ‘five by five’, radio shorthand for a signal received at full strength and full clarity, so that you and your colleagues know exactly where you stand and where you’re headed. Navigation works in a similar way: you need fixed reference points, including where you presently are, to plot a line to where you’re going. This is what archeology is all about: you gotta have those points of where you’ve been to know where you’re going – and what’s likely ahead.

So what’s the big deal? Simple:

This situation reflects a key principle of the (Browne) review: that investment in higher education should be driven by student demand, informed by information about the price and quality of courses. Archaeological science is expensive, and does not attract research funding driven by the search for economic growth. Student numbers are low, nationally, and although student satisfaction measures and price put it on a par with history and English, archaeology departments cannot attract students in the same numbers, and are finding it hard to cover their costs.

In other words, what kind of career can one reasonably expect as an archeology graduate? From my own experience: an old family friend of ours has a son who studied archeology at the University of Washington on a full scholarship (no slacker he!). But as his studies were coming to a close, his career options were severely limited. So he took advantage of another option: he traveled to Hawaii and eventually became a battalion chief for one of the fire departments on the islands (mind you, this included HALO training (High Altitude Low Opening; think skydiving from several miles up) with members of a SEAL team, a six-month tour of duty with the U.S. Coast Guard in the Bering Sea, and a whole lot of other stuff).

Not bad for a PhD in archeology, eh? But that’s the point: he’s not an archeologist. And not to knock having a good battalion fire chief, but we’re down one archeologist.

And it’s not just about archeology:

Archaeology is not alone. ‘Hard’ or ‘small’ languages are also under pressure. They too, will struggle to make their way on the basis of research grants so that the national capacity in Russian, German and Portuguese are likely to decline. As with archaeology, a standard university response will probably be to reduce costs – by concentrating on language teaching, and reducing the provision in the politics, sociology, history or literature of those societies. We might expect more degrees in, say, politics with Russian language, emphasising accurate use of the language, and many fewer which emphasise cultural understanding in the fullest sense. While this may satisfy student demand, and allow universities to continue to prosper, it would represent a significant loss to our national research capacity and knowledge base.

So what’s the big deal? If we can’t speak these languages or know these sciences anyhow, what difference does it make?

A big difference.

We are ultimately the sum of what we know, and by continually learning and expanding our knowledge base, we gain greater capacity and a stronger ability to solve whatever’s thrown at us. It’s worth noting that during the Italian Renaissance, at the same time folks were inventing double-entry accounting and establishing the first real international banking houses, they also made it a point to learn to sing, dance, speak different languages and practice art, among many other skills and talents. Understand: the ability to learn is itself a skill, best acquired through the study of many things, and today’s world, like that of the Renaissance, is a very competitive one (although during the Renaissance, one also learned to handle a sword, daggers and gunpowder, since being assaulted was routine and assassination a daily possibility: something to keep in mind whenever you think of the Renaissance as a time of fops and wimps in funny clothing painting neat stuff on ceilings).

Moving back to the present, it’s interesting to note that during the 1980s the demand for Arabic speakers was very limited: due to budget cuts, there was little call for them outside of some oil / energy companies or obscure scholastic positions. In fact, the U.S. State Department had very few fluent Arabic speakers in those years. Enter 9/11 and voilà! The demand explodes. But we’ve been behind the curve for some time; had we had a greater pool of Arabic speakers, chances are we could’ve prevented a lot of bloodshed and chaos (not to mention attained a greater understanding and appreciation of Arabic culture that could foster better future relations).

Bottom line: you can’t read a book unless you start at the beginning; it doesn’t work when you start in the middle. And it’s no different when you walk into a boardroom session without knowing what’s been going on before you walked in and start to give a speech with no frame of reference; in fact, it can be downright awkward.

In today’s world, we’re increasingly facing big and ugly challenges: climate change, growing conflicts over diminishing resources, rising social unrest; the list goes on. We cannot dismiss the past on the assumption of ‘been there, done that’, as many of the challenges we face now were similarly faced in the past. Knowing our past is little different from a professional undergoing intensive training: sure, it may not be the real thing, but when the building starts to collapse or when the shit hits the fan, those who are trained and have a deeper knowledge base are far more likely to survive and overcome the situation.

As the old saying goes, ‘those who forget the past are doomed to repeat it’.

But here’s another quote truly worth remembering and sharing:

We must welcome the future, remembering that soon it will be the past; and we must respect the past, remembering that it was once all that was humanly possible.

George Santayana has a good point.

For more about this subject, here’s a good article worth reading:

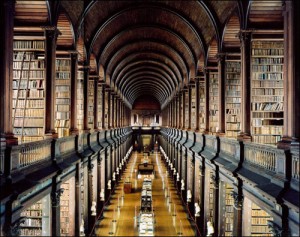

Libraries: The New Frontier

The times are a-changing, and among those changes is the notion of what makes a library a library, and of how libraries are able to impact us more than ever before.

Libraries ain’t just a place to do your homework, as what’s taking place in Arizona demonstrates:

Arizona State is planning in the next few months to roll out a network of co-working business incubators inside public libraries, starting with a pilot in the downtown Civic Center Library in Scottsdale. The university is calling the plan, ambitiously, the Alexandria Network.

Libraries as incubators?

Consider: it makes perfect sense. Where else can you go and draw upon resources to develop business plans, seek out possible funding sources, lay out building plans and/or system schematics, develop new potential business contacts / networks or enroll in job / skills training?

As the folks in Arizona explained:

One of the world’s first and most famous libraries, in Alexandria, Egypt, was frequently home some 2,000 years ago to the self-starters and self-employed of that era. “When you look back in history, they had philosophers and mathematicians and all sorts of folks who would get together and solve the problems of their time,” says Tracy Lea, the venture manager with Arizona State University’s economic development and community engagement arm. “We kind of look at it as the first template for the university. They had lecture halls, gathering spaces. They had co-working spaces.”

Makes perfect sense; in fact, why didn’t anyone see this before? Rather than just a place where one can go to read or check out some books, libraries are playing an ever more important role in today’s world. Libraries are now resource centers: places where folks can get the tools, resources and skills they need to start a business, learn a trade or develop a new product.

Libraries are invaluable engines of social and economic growth:

Libraries also provide a perfect venue to expand the concept of start-up accelerators beyond the renovated warehouses and stylish offices of “innovation districts.” They offer a more familiar entry-point for potential entrepreneurs less likely to walk into a traditional start-up incubator (or an ASU – Arizona State University – office, for that matter). Public libraries long ago democratized access to knowledge; now they could do the same in a start-up economy.

On a more practical level, this also implies that libraries now need to turn to other funding sources to meet their needs. As reported in the past, libraries nationwide are facing major cuts, with some local governments even shutting down their libraries outright (as was done in Camden, New Jersey nearly two years ago). With libraries’ new definition and roles, now would be a good time for librarians to come together, reach out to local and area businesses, and seek funding not through the usual means but through grants from the federal Commerce Department, local Chambers of Commerce, various foundations and the like.

And given their well-established role, libraries offer a truly cost-effective return on investment, something for any funding entity to seriously consider.

Libraries are not just about books: they’re about information, and about conveying and distributing that information effectively.

Libraries are now, more than ever before, invaluable community resources that could very well help establish and maintain economic and social development for the 21st century.

For more about what’s going on in Arizona, check out this link: http://www.theatlanticcities.com/jobs-and-economy/2013/02/why-libraries-should-be-next-great-startup-incubators/4733/

Good Education Creates Great Communities

Recently, I signed on as a volunteer Operations Officer for a rather remarkable school. It’s called CERN (Center for Educational Resource Network), and it’s based in what is arguably one of the most dangerous and economically depressed cities in the country: Camden. CERN’s neighborhood is rife with gangs, among them the Latin Kings and the Bloods. Camden also has one of the highest crime and homicide rates of any urban center, regardless of country or locality.

And yet, standing in the CERN classrooms, you’ll find no shooting, knifing, fighting, hollering or mayhem. Gang members from different associations sit in the same classrooms, doing their homework. Rather than violence, the rooms are abuzz with another sort of activity: students pounding away at their keyboards, reading their assignments, writing their essays or conversing with their classmates as though it’s no big deal.

Like a real school should be.

“Here at CERN, we have a rule: ‘You don’t fight, you write,’” explained Angel Cordero, CERN’s Executive Director.

Founded on January 3, 2007, as a computer-based learning center serving between 20 and 30 high-school-level students, CERN has grown rather fast: in the past year it graduated over 400 students (and this was apparently a small year; previous graduating classes have topped 800 students).

Leaving all this aside, what makes CERN stand out is its model: among other aspects of its programs, CERN works closely with job placement services and training schools. This is a very important point to consider, and here’s why.

There is a growing disconnect between what schools teach and what the workplace expects and needs. A McKinsey survey reported in December 2012 found that only 42 percent of employers think students are prepared for work, while 72 percent of educational institutions think they are. In another recent, related GE survey, C-suite executives said linking schools with business was one of their top priorities.

To put it another way, 58 percent of employers do not think that schools are preparing students for the workplace while 72 percent of educational institutions think that they indeed are.

Can anyone say serious disconnect?

It raises the question: what is the purpose of all these conferences and seminars (some in such cool locales as Hawaii: http://cait.hpu.edu/cait-staff-attend-google-apps-summit/)? Doesn’t anyone talk to each other? Apparently not. But at schools such as CERN (and there are others as well), the art of teaching is increasingly linked to the art of listening: listening to what employers need and want, and to what students seek and are willing to learn, and making sure the two meet.

There are other schools out there that are making headway (and this despite the best efforts of some folks who are often critical or suspicious of these non-public school alternatives), but consider CERN’s setting: the Camden public school system faces a dropout rate of (conservatively) approximately 60%. In other words, only 4 out of 10 students graduate from the Camden school system, and of those graduates, many still fail remedial reading and mathematics.

Mind you, the McKinsey findings that raise these very issues of the disconnect between jobs and education didn’t just surface in some liberal-minded, soppy think tank: they were reported by that stalwart of capitalism, Forbes magazine. A good, solid education ain’t just soppy liberalism: it’s about having a good, strong, solid American economy. Period.

And please, avoid couching this subject in the old, hackneyed argument of urban schools versus non-urban schools: it’s really all about the future, as our nation’s labor force is increasingly going to draw upon those areas where schools are seriously lacking. Want a viable, taxpaying middle class in America? Think better schools, because good education leads to stronger economic growth and social possibilities. Given that the workforce is going to draw increasingly upon population segments traditionally lacking in education, neglect them and you can kiss the American Dream goodbye: no middle class means a poor national future for us all. Unless we recognize this, chances are we’re going to see, within our lifetimes, our nation fall from being a First World power to a Third World country.

Schools are ground zero for the future: we’d better make damned sure we’re meeting our goals, otherwise everything goes to hell.

For more on CERN, check out the website: http://www.cerncamden.org

For more on the McKinsey report, check out this link: http://www.forbes.com/sites/joshbersin/2012/12/10/growing-gap-between-what-business-needs-and-what-education-provides/

Skating on Thin Ice: Technology, The Stock Market and The Law

One day back in 1987, a broker with a background in software decided to (literally) hook his computer up to a Nasdaq terminal, forever transforming the way Wall Street does business. So successful was this development that SEC regulators and other business entities were convinced a bank of traders was working behind the scenes; it wasn’t until later that the full power of computer trading came to be appreciated.

Fast forward to the present time: now, much like Moore’s Law, computer trading is starting to hit some major walls.

Trading, whether on Wall Street, in Singapore, on the Shanghai exchange (home of the SSE Composite index), or in Russia, Turkey or on the German Börse, is all about speed: hear the words being shouted out, watch the numbers fly and the trade slips go fast. And now, with computerized data processing, it’s much, much faster.

But how fast can fast go? It’s an inherently loaded question because, as firms seek the fastest and greatest processing speeds, trade times are literally dwindling into fractions of a millisecond. The ROI (Return On Investment) on such systems is increasingly being stretched ludicrously thin: how much would you be willing to spend on a system that shaves .00001 of a second off your trades? Some would argue that such savings are cumulative: a .00001 here and a .00001 there adds up to real time and money, right?
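To put some rough numbers on that "it adds up" argument, here’s a quick back-of-envelope sketch in Python. It reads the .00001 figure as seconds (10 microseconds) per trade, which is an assumption; the trading volume and the 252-day trading year are hypothetical inputs, not figures from any real firm.

```python
# Back-of-envelope: does shaving ~10 microseconds per trade add up?
# Only the 0.00001 s figure comes from the text; the other inputs are hypothetical.

SAVED_PER_TRADE_US = 10        # 0.00001 s = 10 microseconds saved on each trade
TRADES_PER_DAY = 1_000_000     # hypothetical trading volume for one firm
TRADING_DAYS_PER_YEAR = 252    # assumption: a typical US exchange calendar


def yearly_time_saved_seconds(saved_us: int, trades_per_day: int, days: int) -> float:
    """Total time saved in a year, in seconds (integer microseconds avoid rounding error)."""
    total_us = saved_us * trades_per_day * days
    return total_us / 1_000_000


seconds = yearly_time_saved_seconds(SAVED_PER_TRADE_US, TRADES_PER_DAY, TRADING_DAYS_PER_YEAR)
print(f"{seconds:.0f} s saved per year (about {seconds / 60:.0f} minutes)")
# prints: 2520 s saved per year (about 42 minutes)
```

Note that the sketch only totals time, not money: whether 42 minutes of cumulative latency a year justifies the hardware bill is exactly the ROI question.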

But it’s more than just speed: it’s also about algorithms. The trick now is to sense trends and trade patterns: seeing where a particular market segment is going (say, futures, pork bellies or commodities) and voilà! You make your play. As the great Wayne Gretzky put it, “I skate to where the puck is going to be, not where it has been.”
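Real trading algorithms are proprietary and far more elaborate than anything that fits in a blog post. As a deliberately simple stand-in, here’s the textbook moving-average trend signal: compare a short-term average price with a longer-term one and treat "short above long" as a sign the market is heading up. The function names, window lengths and price series below are made up for illustration.

```python
from typing import List, Optional


def moving_average(prices: List[float], window: int) -> Optional[float]:
    """Average of the most recent `window` prices, or None if there aren't enough yet."""
    if window <= 0 or len(prices) < window:
        return None
    return sum(prices[-window:]) / window


def trend_signal(prices: List[float], short: int = 3, long: int = 5) -> str:
    """'buy' if the short-term average is above the long-term one (prices trending up),
    'sell' if it is below (trending down), otherwise 'hold'."""
    fast = moving_average(prices, short)
    slow = moving_average(prices, long)
    if fast is None or slow is None or fast == slow:
        return "hold"
    return "buy" if fast > slow else "sell"


print(trend_signal([10, 10, 10, 11, 12]))  # rising prices
print(trend_signal([12, 11, 10, 10, 10]))  # falling prices
```

The short window reacts quickly and the long window slowly, so their gap is a crude "where is the puck going" estimate; it also lags badly on sudden reversals, which is part of why faster and fancier systems keep getting built.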

But one of the fathers of computer trading, Thomas Peterffy, is no longer so sure. As he stated in an interview on NPR (http://www.npr.org/blogs/money/2012/08/21/159373388/episode-396-a-father-of-high-speed-trading-thinks-we-should-slow-down), gains from faster speeds are now uncertain and must be balanced against the inherent risks involved: faster trading means the potential for hitting the wall at higher speeds, and far greater losses.

High-speed trading does have its risks. And surprise! Regulation is not keeping up. But what is to be done? In a new paper, “Process, Responsibility and Myron’s Law,” the economist Paul Romer argues that we need to start paying attention to the dynamics of how new rules are developed, and he points to Myron’s Law: given enough time, any particular tax code will end up collecting zero revenue, as loopholes are discovered and exploited. Laws must adapt to changing conditions and tools, or they fail to do their duty.
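Here’s a toy model of Myron’s Law, just to show the shape of the idea. It assumes (purely for illustration, not from Romer’s paper) that each year newly exploited loopholes shield a fixed fraction of whatever tax base is still being taxed; the 10% "leak rate" and the starting revenue of 100 are hypothetical.

```python
from typing import List


def revenue_under_myrons_law(initial: float, leak_rate: float, years: int) -> List[float]:
    """Toy model: each year, newly exploited loopholes shield a fixed fraction
    (`leak_rate`) of whatever tax base is still being taxed.
    Returns the revenue collected in each year."""
    if not 0 < leak_rate < 1:
        raise ValueError("leak_rate must be between 0 and 1")
    revenue, history = initial, []
    for _ in range(years):
        history.append(revenue)
        revenue *= 1 - leak_rate  # loopholes found this year erode next year's take
    return history


history = revenue_under_myrons_law(initial=100.0, leak_rate=0.10, years=30)
print(f"year 1: {history[0]:.1f}, year 30: {history[-1]:.1f}")
```

In this geometric model revenue never quite hits zero; it just keeps shrinking toward it unless the rules are revised, which is the point Romer is making about letting regulations adapt.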

Romer’s solution? Simple – and effective (and to be certain, rational business types will be open to its approach).

Keep it simple.

Compare the approach of, say, the Occupational Safety and Health Administration (OSHA), which has a painfully detailed and somewhat contradictory rule, 1926.1052(c)(3), about the height of stair-rails (to cite but one example), with that of the FAA (Federal Aviation Administration), which simply requires planes to be airworthy to the satisfaction of its inspectors. Romer argues that financial regulations more closely resemble OSHA’s rule 1926.1052(c)(3) than the FAA’s “airworthy” principle, and that this is a problem. With the addition of new technology (and it only promises to get more complicated as time goes on), financial regulations as they presently stand are not going to be truly effective.

Another case in point is GE (General Electric): thanks to its dedicated staff of professional tax attorneys, GE was, back around 2009, on the hook for only about $12,000 in federal taxes, and this from a firm worth billions. All perfectly legal.

As the Roman historian Tacitus said, “corrupt is the land with many laws”: the more laws there are on the books, the more likely you are to get loopholes and deals made to avoid those laws. The same goes for computer trading and the stock market in general: unless these issues are addressed now, things are only going to get stickier and more complicated, with an ever-rising potential for crashes and financial failures that will make the recent crash seem like a mere holiday in comparison.

See the puck go flying across the ice and into the stands,…

Whoo-Hoo! Beam me Up, Scotty!

Well, not quite, but as reported in Universe Today (http://www.universetoday.com/99604/dont-tell-bones-are-we-one-step-closer-to-beaming-up/), it’s edging closer to reality. Recent major advances in teleportation research are opening up new and interesting pathways to other things as well:


While we’re still a very long way off from instantly transporting from ship to planet à la Star Trek, scientists are still relentlessly working on the type of quantum technologies that could one day make this sci-fi staple a possibility. Just recently, researchers at the University of Cambridge in the UK have reported ways to simplify the instantaneous transmission of quantum information using less “entanglement,” thereby making the process more efficient — as well as less error-prone.

In a paper titled “Generalized teleportation and entanglement recycling” Cambridge researchers Sergii Strelchuk, Michal Horodecki and Jonathan Oppenheim investigate a couple of previously developed protocols for quantum teleportation.

So what? Now we have a bunch of guys hanging around trying to make Star Trek a reality – right? Guess again:

“Teleportation lies at the very heart of quantum information theory, being the pivotal primitive in a variety of tasks. Teleportation protocols are a way of sending an unknown quantum state from one party to another using a resource in the form of an entangled state shared between two parties, Alice and Bob, in advance. First, Alice performs a measurement on the state she wants to teleport and her part of the resource state, then she communicates the classical information to Bob. He applies the unitary operation conditioned on that information to obtain the teleported state.” (Strelchuk et al.)
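The quoted passage lays out the standard protocol step by step. To make those steps concrete, here’s a minimal pure-Python state-vector sketch of the textbook single-qubit version. It is not the Cambridge team’s generalized or entanglement-recycling protocol, which it doesn’t attempt to model. The qubit ordering, function names and the sample state 0.6|0> + 0.8i|1> are my own choices for illustration; rather than sampling a random measurement, it walks through all four possible outcomes of Alice’s measurement and applies Bob’s correction for each.

```python
import math

N = 3  # qubit 0: Alice's unknown state, qubit 1: Alice's half of the pair, qubit 2: Bob's half


def _mask(q):
    # Qubit 0 is the most significant bit of the basis-state index.
    return 1 << (N - 1 - q)


def apply_h(state, q):
    """Hadamard on qubit q: |0> -> (|0>+|1>)/sqrt2, |1> -> (|0>-|1>)/sqrt2."""
    m, s = _mask(q), 1 / math.sqrt(2)
    out = [0j] * len(state)
    for i, amp in enumerate(state):
        if i & m:
            out[i ^ m] += s * amp
            out[i] -= s * amp
        else:
            out[i] += s * amp
            out[i | m] += s * amp
    return out


def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is 1."""
    cm, tm = _mask(control), _mask(target)
    out = [0j] * len(state)
    for i, amp in enumerate(state):
        out[i ^ tm if i & cm else i] += amp
    return out


def teleport(alpha, beta):
    """Run the textbook protocol for every measurement outcome.

    Returns {(m0, m1): (probability, Bob's corrected qubit as [a, b])}.
    """
    bell = [1 / math.sqrt(2), 0, 0, 1 / math.sqrt(2)]  # (|00> + |11>)/sqrt2 shared in advance
    psi = [alpha, beta]
    state = [psi[i >> 2] * bell[i & 3] for i in range(2 ** N)]
    # Alice entangles her unknown qubit with her half of the pair, then measures both.
    state = apply_h(apply_cnot(state, 0, 1), 0)
    results = {}
    for m0 in (0, 1):
        for m1 in (0, 1):
            base = (m0 << 2) | (m1 << 1)
            b0, b1 = state[base], state[base | 1]  # Bob's qubit, given outcome (m0, m1)
            prob = abs(b0) ** 2 + abs(b1) ** 2
            b0, b1 = b0 / math.sqrt(prob), b1 / math.sqrt(prob)
            # Bob's correction, conditioned on the two classical bits Alice sends him.
            if m1:
                b0, b1 = b1, b0  # X gate
            if m0:
                b1 = -b1  # Z gate
            results[(m0, m1)] = (prob, [b0, b1])
    return results


for outcome, (p, bob) in teleport(0.6, 0.8j).items():
    print(outcome, round(p, 3), bob)
```

Each of the four outcomes turns up with probability 1/4, and after Bob’s correction his qubit matches the original state every time. Without the two classical bits he wouldn’t know which correction to apply, which is why the "communicates the classical information to Bob" step in the quote can’t be skipped.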

It’s more than just ‘beaming around’ the universe: the theory of teleportation also lies at the heart of creating a quantum computer, and this is very big business (as we already discussed in an earlier blog post, “The CIA and Jeff Bezos: Working Together For (Our?) / The Future,” in which the CIA is working with Amazon’s founder and Chief Executive, Jeff Bezos, on the development of the world’s first quantum computer).

So understanding how transporters could theoretically work also impacts the development of the next generation of advanced computers – and with that, artificial intelligence, computer networks, the nature of how goods and products are processed and distributed, etc., etc., etc.

You get the idea; it’s not just about a bunch of geeks and nerds working on abstract notions: we’re talking about potentially big money and tremendous economic impact(s).

So where does all of this leave us now? Actually, pretty far along. Consider the sheer amount of information that makes up the state of a single object, a state that is itself difficult to determine: in the case of a human, even simplistically speaking, it comes to about 10^28 kilobytes worth of data (a 1 followed by 28 zeros). With numbers like that, you’re obviously going to want to keep the amount of entanglement to a minimum. As the gentlemen from Cambridge point out:

Of course, we’re not saying we can teleport red-shirted security officers anywhere yet.

Still, with a more efficient method to reduce — and even recycle — entanglement, Strelchuk and his team are bringing us a little closer to making quantum computing a reality. And it may very well take the power of a quantum computer to even make the physical teleportation of large-scale objects possible… once the technology becomes available.

Remember: when we speak of transporters / teleportation, we’re really talking about the transmission of information. Teleportation is not just about magic and sci-fi stuff; it’s about hard data processing and transmission. Get this down pat and we’re well on the way to bigger and better things:

“We are very excited to show that recycling works in theory, and hope that it will find future applications in areas such as quantum computation,” said Strelchuk. “Building a quantum computer is one of the great challenges of modern physics, and it is hoped that the new teleportation protocol will lead to advances in this area.”

Chances are, we may yet see a true quantum computer in our lifetime, and maybe, just maybe, we’ll also be able to ‘beam’ objects around, but not people anytime soon. The amount of information needed to represent a person is likely to be staggering: the human genome alone runs to roughly 3 billion base pairs of DNA, and when one considers the information contained in the trillions of cells within us (and given that each of us is unique in our own way), don’t expect to see a working teleportation device anytime soon.
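For a sense of scale, here’s a back-of-envelope Python sketch of how long it would take just to transmit the roughly 10^28 kilobytes quoted earlier for a human. It assumes decimal kilobytes (1,000 bytes) and a hypothetical 10 Gbit/s connection; both are illustrative choices, not figures from the article.

```python
# How long would it take to send the ~10^28 kilobytes quoted for a human?
KILOBYTES = 10 ** 28             # figure quoted in the Universe Today article
BYTES_PER_KILOBYTE = 1_000       # assumption: decimal kilobytes
LINK_BITS_PER_SECOND = 10 ** 10  # assumption: a hypothetical 10 Gbit/s connection
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def transfer_time_years(kilobytes: int, bits_per_second: int) -> float:
    """Time to push `kilobytes` of data through a link of the given speed, in years."""
    total_bits = kilobytes * BYTES_PER_KILOBYTE * 8
    seconds = total_bits / bits_per_second
    return seconds / SECONDS_PER_YEAR


years = transfer_time_years(KILOBYTES, LINK_BITS_PER_SECOND)
print(f"about {years:.1e} years")
```

That works out to a couple of hundred trillion years at 10 Gbit/s, which is why keeping the information (and entanglement) budget down matters so much, and why small objects are a far more realistic first target than people.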

But a personal home teleportation device for objects? That may be coming sooner than you think. Just as fax machines send written correspondence by electronic means, we may one day have our own personal transporters, sending holiday or birthday gifts directly to our loved ones….